It was all going so well: I got my Raspberry Pi, and after the initial fiddle with Debian Squeeze I got another SD card and put Raspbmc on it. Things were great!

The only niggle in my head was that the card I put Raspbmc on was 8GB, and that bigger card would be put to better use in my camera, which was using a 4GB card. Surely it would be no problem to reformat the cards and swap them over?

Wrong!

The 8GB card worked fine in the camera, and I used the Raspbmc installer as before to load Raspbmc onto the new SD card. The trouble was that when I first booted up the Pi, it seemed to freeze on the message:

`Sending HTTP request to server`

No problem, I thought: I'll hop on my laptop and find out whether other users have experienced the same. But lo and behold, the internet on my laptop stopped working, with strange requests for proxy passwords for sites like Facebook and even the Weather gadget on Win 7!

My first thought was that I had cooked my router, as I had been downloading a lot, and on a warm day too (yes, there was a warm day… I think!). But after leaving it off for as long as I could stand, I powered it back on and normal service was resumed.

After rebooting all the network equipment, it finally dawned on me that the internet went down for everything connected to my network whenever the Pi was powered up! I had never experienced this before and could not for the life of me fathom it out. Could a defect in the Pi mean that some sort of power surge was knocking out the system? I quickly dismissed this, as local traffic was unaffected, meaning the network hardware was operating normally.

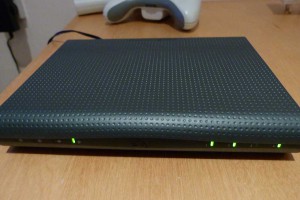

A quick glance at my Sky Broadband-supplied Sagem F@ST 2504 modem showed that the internet connection had failed: the internet indicator was glowing orange with a red pulse every second. Stranger still, when I unplugged the Raspberry Pi, the connection to the net was restored within seconds!

So how can a network device target and take down an internet connection? It's my understanding that a Pi cannot retain any settings other than what's stored on its SD card, yet this issue persisted across two different memory cards.

Resorting to an extreme form of troubleshooting, I disconnected all network devices, including my second switch/access point, from the Sagem router, leaving just the Pi connected. Then, from Midori on Debian Squeeze (remember that the internal network was unaffected), I rebooted the router using its web interface.

Suddenly the Pi could connect, and when I plugged the rest of my network back in, I found that everything was back to normal:

Laptop, Pi, iPhone, everything!

And this is the worst thing: I don’t know what caused this, or what specifically I did in the reboot process that solved it.

So I would love to hear whether this has happened to you, and whether you could pinpoint the cause. This one has got me completely stumped!